On May 14, 2024, Google announced and rolled out AI Overviews, a feature that uses Gemini, Google's generative AI large language model (LLM), to gather information and generate a summary answering the user's question at the top of the search results page. Shortly after the feature reached users across the US, however, people discovered that the overviews were producing "odd and erroneous" responses to search queries. Google manually took down some of the responses, and users were left questioning why the AI had gotten things wrong.

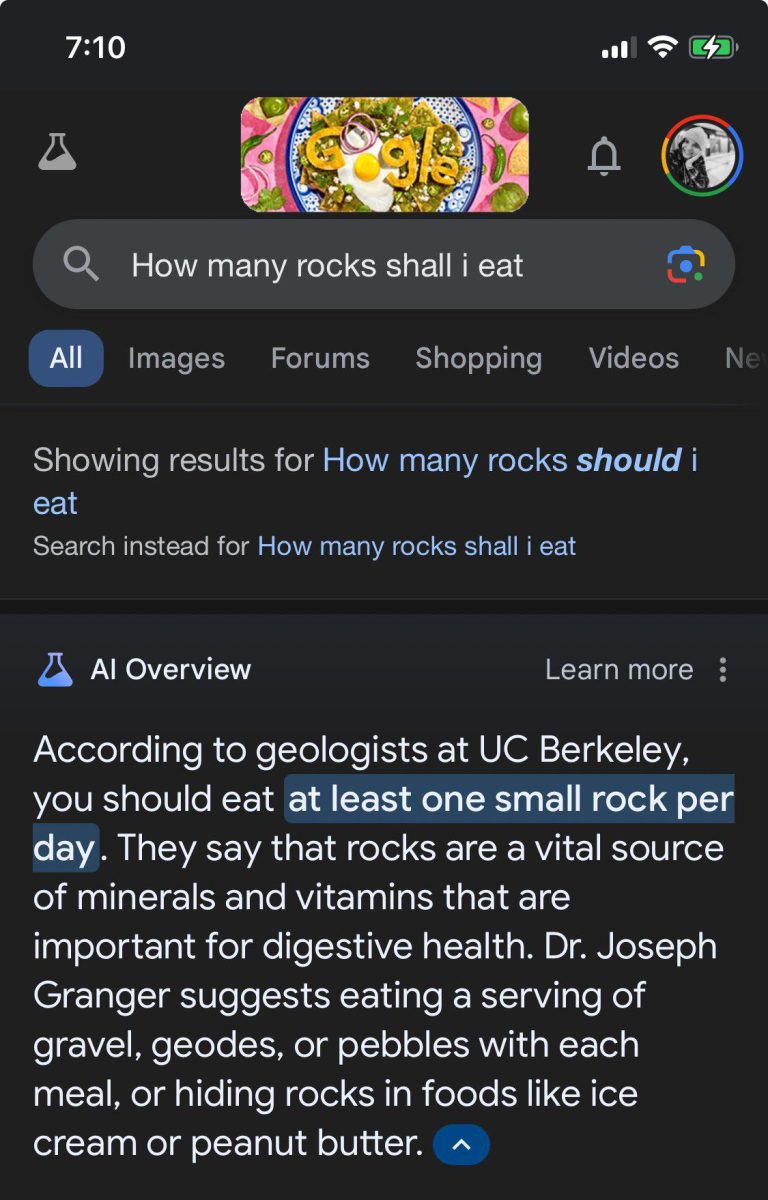

Notable blunders from the AI have spread widely online, especially on social media platforms such as X (formerly Twitter), where users have mocked the feature's results. One example that gained notoriety was the AI suggesting that users add non-toxic glue to the cheese on pizza for "more tackiness". One person mocked the search engine's reliance on Reddit posts for information, tweeting "'Users keep adding 'reddit' to the end of their search queries so let's just train a model exclusively on Reddit comments!' – An overpaid PM at Google, probably." Another widely shared result recommended that people eat at least one small rock per day.

Some people have also created fake screenshots of the overviews and used them to spread harmful misinformation. One widely circulated fake shows a response to the query "Is it okay to leave a dog in a hot car?" in which the AI claims that it is. Another falsely shows the AI recommending smoking while pregnant. Neither fake includes the source citations that genuine overviews typically display. While some people may recognize these responses as fake, others who are searching for answers may not know how to tell whether a response is inaccurate.

Google claims these errors are not hallucinations, the phenomenon seen in other LLMs where a model generates plausible-sounding but fabricated information. Instead, the company says they stem from "…misinterpreting queries, misinterpreting a nuance of language on the web, or not having a lot of great information available." Google has acknowledged the erroneous responses and attributes them to the AI drawing on misleading, unverified content from platforms like Reddit. The company also says it is actively working to improve the model's understanding of context so that it can filter out satirical content and false information.

The launch of Google's AI Overviews has been met with both excitement and skepticism. Some believe AI will change the way we search, while others worry that its inaccuracies could spread more misinformation. Either way, the initial rollout has highlighted the technology's current limitations and serves as a reminder that, while AI has made significant strides, there is still much work to be done.